on October 25, 2025

Prompting is simply the act of writing a query or instruction in natural language and sending it to a language model like GPT. The model splits the text into tokens, processes them, and generates a response as a sequence of new tokens.

In simple terms:

You write a text-based instruction or question, the model breaks it into tokens and gives you a response. That’s prompting.
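To make the tokenization step concrete, here is a deliberately simplified Python sketch. It splits text into words and punctuation, which is an assumption for illustration only: real LLMs such as GPT use learned subword tokenizers (for example, byte-pair encoding), so their token boundaries and counts will differ. The function name `toy_tokenize` is hypothetical.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation tokens.

    Simplified stand-in only: production LLMs use learned subword
    tokenizers, so one word may become several tokens.
    """
    # \w+ matches runs of letters/digits/underscores;
    # [^\w\s] matches a single punctuation character. Whitespace is skipped.
    return re.findall(r"\w+|[^\w\s]", text)


prompt = "Explain prompting in one sentence."
tokens = toy_tokenize(prompt)
print(tokens)               # ['Explain', 'prompting', 'in', 'one', 'sentence', '.']
print(len(tokens), "tokens")  # 6 tokens
```

The model never sees your prompt as one block of text; it works on a sequence of tokens like this and predicts new tokens to build its reply.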

Your prompt can be:

- A simple phrase

- A detailed question

- Or a well-crafted instruction with examples

The clarity, detail, and structure of your prompt directly impact the quality of the AI’s response.

Good prompts shape good AI outputs.

This is why crafting prompts is such an important part of working with LLMs. The art of refining your prompts to get better results is known as Prompt Engineering.

Why Prompting Matters

Even though you don’t need to know the internal architecture of an LLM to use it, a basic understanding helps you write better prompts.

LLMs are built on NLP (Natural Language Processing) and Machine Learning, which allow them to understand your input and generate human-like responses. But how helpful or accurate the response is depends a lot on your prompt.

Here’s what I’ve learned:

- Your prompt is your conversation.

- The way you frame, refine, and expand your prompt affects whether you get a vague answer or a deep, insightful one.

- It’s important to provide context, be specific, and build on the conversation.

Types of Prompting (with My Examples)

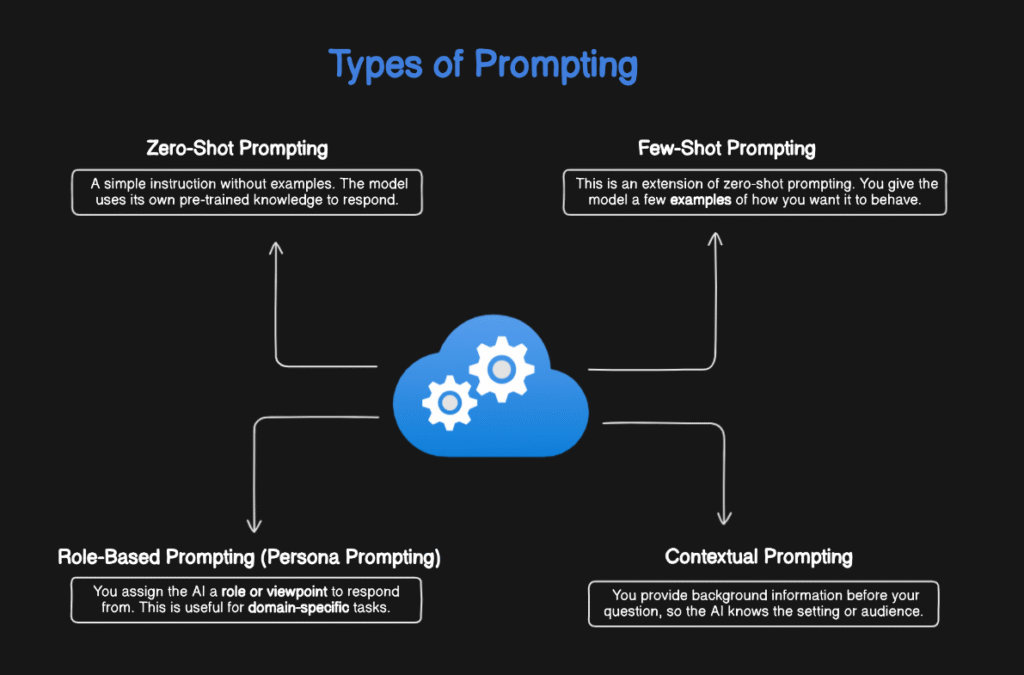

Here are some key types of prompting I’ve learned and experimented with. The image above shows my examples of each type.

1. Zero-Shot Prompting

You give a simple instruction without any examples, and the model relies on its pre-trained knowledge to respond.
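A minimal sketch of a zero-shot prompt in Python, assuming a chat-style API that accepts a list of messages with `role` and `content` fields (a common convention, though the exact format varies by provider). The helper `build_zero_shot` and the review text are made up for illustration; the point is that the prompt contains only the instruction, with no examples.

```python
def build_zero_shot(instruction: str) -> list[dict[str, str]]:
    # Zero-shot: the message holds only the instruction. No examples are
    # included, so the model must rely on what it learned in pre-training.
    return [{"role": "user", "content": instruction}]


messages = build_zero_shot(
    "Classify the sentiment of this review as Positive or Negative: "
    "'The battery dies within an hour.'"
)
print(messages)
```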

2. Few-Shot Prompting

This is an extension of zero-shot prompting. You give the model a few examples of how you want it to behave.
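The sketch below shows one way to lay out a few-shot prompt as plain text. The `Review:`/`Sentiment:` format and the helper `build_few_shot` are illustrative assumptions, not a required syntax; what matters is that labelled examples come first and the final label is left blank for the model to complete.

```python
def build_few_shot(examples: list[tuple[str, str]], query: str) -> str:
    """Build a few-shot prompt: labelled examples first, then the new input."""
    lines = ["Classify the sentiment of each review as Positive or Negative.", ""]
    for review, label in examples:
        # Each example shows the exact input/output pattern we want copied.
        lines.append(f"Review: {review}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final label is left blank so the model fills it in.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


examples = [
    ("I love how light this laptop is.", "Positive"),
    ("The screen cracked after two days.", "Negative"),
]
print(build_few_shot(examples, "The battery dies within an hour."))
```

Keeping every example in exactly the same format as the final query makes the expected pattern easier for the model to pick up.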

3. Chain-of-Thought Prompting

The model is encouraged to break down its reasoning step by step before arriving at an answer.
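Here is a hedged sketch of a chain-of-thought prompt. The hypothetical helper `build_chain_of_thought` appends a request to reason step by step, and `extract_answer` (also hypothetical) pulls the final answer out of a response. The sample response is hand-written for illustration, not real model output.

```python
from typing import Optional


def build_chain_of_thought(question: str) -> str:
    # Ask for step-by-step reasoning, plus a clearly marked final line
    # so the answer is easy to separate from the reasoning.
    return (
        f"{question}\n\n"
        "Think through the problem step by step, showing each step. "
        "Then give the final answer on a line starting with 'Answer:'."
    )


def extract_answer(response: str) -> Optional[str]:
    # Scan from the end, since the final answer should follow the reasoning.
    for line in reversed(response.splitlines()):
        if line.startswith("Answer:"):
            return line[len("Answer:"):].strip()
    return None


prompt = build_chain_of_thought(
    "A cafe sells coffee for $3 and muffins for $2. "
    "How much do 2 coffees and 3 muffins cost?"
)
print(prompt)

# Hand-written sample response (not real model output) showing the expected shape.
sample_response = (
    "2 coffees cost 2 x $3 = $6.\n"
    "3 muffins cost 3 x $2 = $6.\n"
    "Total: $6 + $6 = $12.\n"
    "Answer: $12"
)
print(extract_answer(sample_response))  # $12
```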

4. Role-Based Prompting (Persona Prompting)

You assign the AI a role or viewpoint to respond from. This is useful for domain-specific tasks.
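A minimal sketch of role-based prompting, again assuming a chat-style message list. The persona goes in a `system` message, which many chat APIs use for standing instructions; some providers take the system prompt as a separate parameter instead, so check your API's documentation. `build_persona_messages` and the example role are hypothetical.

```python
def build_persona_messages(role: str, question: str) -> list[dict[str, str]]:
    # The system message sets the persona; the user's question follows
    # as a normal conversational turn.
    return [
        {"role": "system", "content": f"You are {role}. Answer from that perspective."},
        {"role": "user", "content": question},
    ]


messages = build_persona_messages(
    "an experienced cybersecurity analyst",
    "What should a small business do first after a phishing incident?",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```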

5. Contextual Prompting

You provide background information before your question, so the AI knows the setting or audience.
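A small sketch of contextual prompting: background details (audience, setting, tone) are placed before the question. The `Background:`/`Question:` layout and the helper `build_contextual_prompt` are assumptions for illustration; any clear structure that puts the context first serves the same purpose.

```python
def build_contextual_prompt(context: dict[str, str], question: str) -> str:
    # Background comes first so the model reads the setting and audience
    # before it reaches the actual question. Dicts keep insertion order.
    background = "\n".join(f"- {key}: {value}" for key, value in context.items())
    return f"Background:\n{background}\n\nQuestion: {question}"


context = {
    "Audience": "first-year computer science students",
    "Setting": "a 10-minute introductory lecture",
    "Tone": "simple and friendly, no jargon",
}
print(build_contextual_prompt(context, "What is a large language model?"))
```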

Final Thoughts

To learn Generative AI effectively, you don’t have to go deep into the technical internals of LLMs, but you do need to understand the importance of prompting.

Key takeaways:

- Always think about context

- Be clear and specific

- And don’t be afraid to refine and iterate your prompts
